Haptics and Telerobotics

Over the past several decades, telerobotics has advanced from space and underwater exploration to mining, hazardous material handling, surgery, and even entertainment. By combining human intelligence with robotic precision, repeatability, and power, telerobotics enables human-like manipulation in environments that are inaccessible or dangerous.

In the IRiS Lab, we develop stability-guaranteed control methods, immersive haptic interfaces, and Physical AI technologies to transfer human contact-rich manipulation skills to robots. Our goal is to create stable, transparent, and high-performance haptics and telerobotics systems for applications ranging from industrial operations to medical procedures and beyond.

Control Methods for Stable Haptic Interaction

Researchers: Hyeonseok Choi, Jihan An

Haptic technology aims to give the human user realistic and transparent sensations. When rendering physical properties such as stiffness, stable interaction with the virtual environment (VE) is essential. Although stiffness is one of the most basic and best-known physical properties, stable interaction with high-stiffness VEs remains a challenge for kinesthetic haptic devices. In particular, the maximum achievable impedance of a traditional digital control loop is limited by the information the controller loses through time discretization, time delay, position quantization (from using an encoder as the position sensor), and the zero-order hold (ZOH) of the force command during each servo cycle. These effects cause energy leaks and eventually instability unless the leaked energy is dissipated by the intrinsic friction of the device, by the controller, or by damping from the user’s grasp.
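To make the sampling-induced limit concrete, a widely cited passivity condition for a sampled virtual wall (due to Colgate and Schenkel) can serve as a reference point. It is given here only as an illustration of the limit, not as the lab's own result. The symbols are assumptions introduced for this example: $b$ is the physical damping of the device, $K$ and $B$ are the virtual stiffness and damping, and $T$ is the servo period.

$$
b > \frac{K T}{2} + |B|
$$

For example, with a hypothetical device damping of $b = 1\ \mathrm{N\,s/m}$, a 1 kHz servo loop ($T = 0.001\ \mathrm{s}$), and no virtual damping ($B = 0$), the condition gives $K < 2b/T = 2000\ \mathrm{N/m}$. Any stiffer wall needs additional dissipation to remain passive.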

Our lab has proposed several stability-guaranteeing approaches that have been well received in the research community, including, but not limited to, the time-domain passivity approach (TDPA), the input-to-state stable (ISS) approach, the successive force augment (SFA) approach, the successive stiffness increment (SSI) approach, and the unidirectional virtual inertia (UVI) approach. In ongoing research, we are working to maximize the achievable impedance range of haptic and telerobotic systems for highly transparent haptic interaction.
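As an illustration of the idea behind the time-domain passivity approach, the following is a minimal sketch of a passivity observer and passivity controller for a single impedance-type port. It is a simplified textbook form and not the lab's implementation. The class name, the `v_min` threshold, and the sign convention (positive `f_ve * v` means the virtual environment absorbs energy) are assumptions made for this example.

```python
class PassivityController:
    """Passivity observer (PO) plus passivity controller (PC) for one port.

    Sign convention (an assumption of this sketch): f_ve * v > 0 means
    energy flows into the virtual environment, i.e. it is absorbed.
    """

    def __init__(self, dt, v_min=1e-6):
        self.dt = dt          # servo period in seconds
        self.v_min = v_min    # below this speed no damping is injected
        self.energy = 0.0     # observed net energy absorbed by the port (J)

    def step(self, f_ve, v):
        """Return the force to command for this servo cycle."""
        # PO: integrate the power flowing into the virtual environment.
        self.energy += self.dt * f_ve * v

        # PC: if the port has generated net energy, add just enough
        # virtual damping to dissipate the excess in this cycle.
        alpha = 0.0
        if self.energy < 0.0 and abs(v) > self.v_min:
            alpha = -self.energy / (self.dt * v * v)

        # Account for the energy dissipated by the injected damper.
        self.energy += self.dt * alpha * v * v
        return f_ve + alpha * v
```

In a servo loop, `step` would be called once per cycle with the force computed by the virtual environment and the measured device velocity. The damping term stays at zero while the observed energy is non-negative, so the rendered force is only modified when the port behaves actively.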

Associated Papers

- Relaxing Conservatism for Enhanced Impedance Range and Transparency in Haptic Interaction

- Chattering-Free Time Domain Passivity Approach

- Enhancing Contact Stability in Admittance-Type Haptic Interaction Using Bidirectional Time-Domain Passivity Control

- Error-Domain Conservativity Control to Transparently Increase the Stability Range of Time-Discretized Controllers

- Virtual Inertia as an Energy Dissipation Element for Haptic Interfaces

- Successive Stiffness Increment and Time Domain Passivity Approach for Stable and High Bandwidth Control of Series Elastic Actuator

- Time-domain passivity control of haptic interfaces

Shared Autonomy and Teleoperation

Researchers: Kwang-Hyun Lee, Donghyeon Kim

Teleoperation is a promising technology for controlling robots remotely in hazardous or extreme environments, such as space or construction sites. However, direct teleoperation has several limitations. First, it places high physical and cognitive workloads on the operator. Second, it may require highly trained specialists. To address these issues, it is worth introducing different levels of autonomy that support the human during task execution. Virtual fixtures correspond to one of the lowest levels of autonomy: the human receives assistance in orienting the tool, following a path, or avoiding dangerous regions in the workspace. However, virtual fixtures are difficult to generate in unstructured environments. Our lab works on interactive and intuitive methods for virtual fixture generation that apply to a wide range of teleoperation scenarios. In addition, we study how to use various types of virtual fixtures efficiently (e.g., remote and local virtual fixtures) and how to render them depending on the teleoperation situation, such as the time delay and the type of task.
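The two basic kinds of assistance mentioned above, following a path and avoiding a region, can be sketched as simple force fields. This is a minimal illustration under stated assumptions, not the lab's fixture-generation method: the path is a single straight segment, the forbidden region is a sphere, both fixtures are pure springs, and all function names and parameters are hypothetical.

```python
import math


def closest_point_on_segment(p, a, b):
    """Project point p onto the segment from a to b (3D lists of floats)."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ap = [pi - ai for ai, pi in zip(a, p)]
    denom = sum(c * c for c in ab)
    if denom == 0.0:
        return list(a)  # degenerate segment: a and b coincide
    t = sum(x * y for x, y in zip(ap, ab)) / denom
    t = max(0.0, min(1.0, t))  # clamp to the segment ends
    return [ai + t * c for ai, c in zip(a, ab)]


def guidance_fixture_force(p, a, b, stiffness):
    """Spring force pulling the tool position p toward the path segment."""
    q = closest_point_on_segment(p, a, b)
    return [stiffness * (qi - pi) for qi, pi in zip(q, p)]


def forbidden_region_force(p, center, radius, stiffness):
    """One-sided spring pushing the tool out of a spherical region."""
    d = [pi - ci for pi, ci in zip(p, center)]
    dist = math.sqrt(sum(c * c for c in d))
    if dist >= radius or dist == 0.0:
        return [0.0, 0.0, 0.0]  # outside the region (or direction undefined)
    depth = radius - dist
    return [stiffness * depth * c / dist for c in d]
```

The guidance fixture acts everywhere and always pulls toward the path, while the forbidden-region fixture is zero outside the region and only pushes outward once the tool penetrates it. In a real system the resulting force would typically be combined with damping and passed through a stability layer before being sent to the haptic device.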

Another way to teleoperate is to use almost full autonomy, where the human helps the computer solve complicated tasks such as motion planning. Our lab has introduced an approach that records human intuition through a haptic device to reduce the complexity of path planning algorithms in cluttered environments. At the IRiS Lab, we are exploring various levels of autonomy and human-robot interaction techniques to improve teleoperation.

Associated Papers

- Haptic Field and Force Feedback Generation for Wheeled Vehicle Teleoperation on 2.5D Environments

- Method for generating real-time interactive virtual fixture for shared teleoperation in unknown environments

- Simultaneous Use of Autonomy Guidance Haptic Feedback and Obstacle Avoiding Force Feedback for Mobile Robot Teleoperation

Skill Transfer Through Teleoperation

Researchers: Kwang-Hyun Lee, Donghyeon Kim, Seong-Su Park

Major advances in AI are driving a wave of robot learning aimed at training robots that can physically interact with the world, so-called Physical AI. While reinforcement learning (RL), vision-language-action (VLA) models, and large behavior models (LBMs) require large datasets to train Physical AI models, learning from demonstration (LfD) is a promising way to train robot autonomy quickly and efficiently from smaller datasets rich in skillful human motions.

Our LfD research focuses on capturing and learning pure human motions and intentions through teleoperation. Our immersive and realistic haptic teleoperation system lets the human focus on performing the task as if bare-handed, using multimodal feedback such as vision, force, and tactile feedback.

Associated Papers

- Is a Simulation better than Teleoperation for Acquiring Human Manipulation Skill Data?

- Learning Robotic Rotational Manipulation Skill from Bilateral Teleoperation

- Mini-Batched Online Incremental Learning Through Supervisory Teleoperation with Kinesthetic Coupling

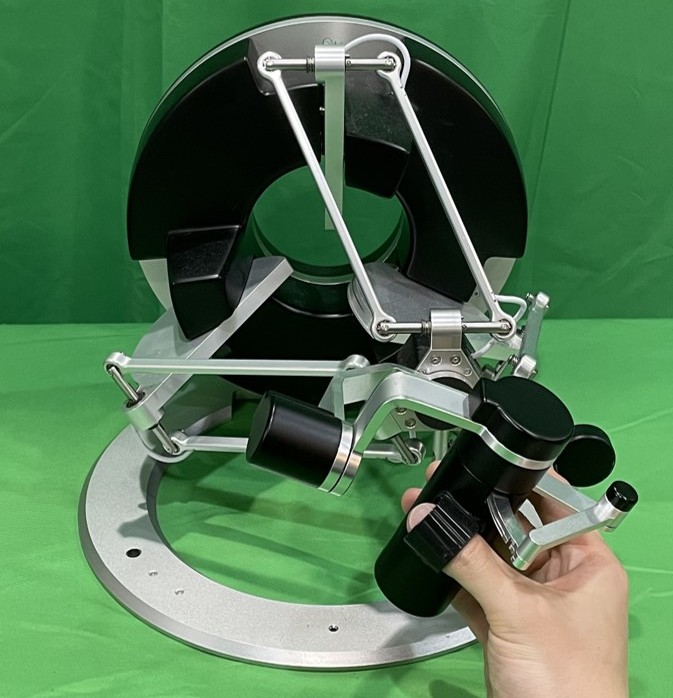

Haptic Device for Intuitive Teleoperation

Researchers: Joong-Ku Lee, Hyeonseok Choi

A lack of situational awareness and hand-eye coordination mismatch make teleoperation challenging. Numerous research efforts have addressed these issues. However, most previous work used conventional general-purpose master interfaces, such as Phantom, Omega, or Virtuose, which are limited in how much they can improve intuitiveness. In the IRiS Lab, we have been developing new types of haptic human interfaces tailored to a specific task or remote robot, to further enhance intuitiveness through improved situational awareness and intrinsic hand-eye coordination matching. As an initial trial of this concept, we are developing a multi-DoF manipulator-based haptic device for intuitive bilateral teleoperation tasks and modular 3-DoF torque feedback devices that can be easily attached to the tip of commercial haptic devices.

Associated Papers

- CoaxHaptics-3RRR: A Novel Mechanically Overdetermined Haptic Interaction Device Based on a Spherical Parallel Mechanism

- Model-Free Energy-Based Friction Compensation for Industrial Collaborative Robots as Haptic Displays

- Optimizing Setup Configuration of a Collaborative Robot Arm-Based Bimanual Haptic Display for Enhanced Performance

Equipment

Manipulators

- 4x Panda, Franka Emika (7-DOF)

- 2x UR16e, Universal Robots (6-DOF)

- UR5, Universal Robots (6-DOF)

- xArm 6, UFACTORY (6-DOF)

Haptic Gloves & Robotic Hands

- Nova, SenseGlove

- ROH-AP001, ROHand (Tactile Sensing)

Haptic Devices

- Omega.7, Force Dimension (1ea / Parallel Type)

- Phantom Premium 1.5 (1ea / Serial Type)

HMDs

- VIVE XR Elite, HTC Vive (1ea)

- VIVE Focus 3, HTC Vive (1ea)

- VIVE Pro, HTC Vive (1ea)

Sensors

- 4x Axia80, ATI (6-DOF F/T Sensor)

- 2x Basler ace 2-a2A3840, Basler (RGB / 8.3MP resolution)

- XR44, Xela Robotics (Magnetic Type Tactile Sensor)

GPU Computing

- 2x A6000, NVIDIA

- 4x RTX 4090, NVIDIA